Averaging vs Partition Averaging

TLDR; This post discusses my NLP research work on representing documents by weighted partition word vectors averaging. (15-minute read)

tags: document representation, partition word vectors averaging

Prologue

Let's consider a corpus (C) with N documents with the corresponding most frequent words vocabulary (V). Figure1 represents the word-vectors space of V, where similar meaning words occur closer to each other (a partition of word vocabulary based on word vector similarity).

Few words are polysemic and belong to multiple topics with some proportion. In figure 1 we represent the topic number of the word in subscript and corresponding proportion in braces.

|

| Figure 1: Corpus C-word vocabulary partitioned using word2vec fuzzy clustering |

Let's consider a text document (d):

"Data journalists deliver data science news to the general public. They often take part in interpreting the data models. Also, they create graphical designs and interview the directors and CEO's."

One can directly average the word vectors of the words to represent the document, as shown below,

|

| Document representation by simple averaging Here, + represents an element-wise vector addition. |

It can be seen that we are averaging word vectors which have very different semantic meaning, e.g., words belonging to partition 1 such as 'graphical', 'design', and 'interpreting' are averaged with different semantic meanings, words of partition 2 such as 'data science', 'model', and 'data'. Also, the document is represented in the same d dimensional space as word vectors.

However, we do an average of word vectors of words within a partition and concatenate the average vector across partitions to represent the document.

However, we do an average of word vectors of words within a partition and concatenate the average vector across partitions to represent the document.

| |

|

Words belonging to different semantic topics are separated by concatenation (⊕) as they represent separate meanings, whereas words coming from the same topics are simple averages since they represent the same meaning. E.g., an average of words belonging to partition 1 such as 'graphical', 'design', and 'interpreting' is concatenated to the average of words of partition 2 such as 'data science', 'model', and 'data'. The final document vector is represented in a higher 5 × d dimension vector space, thus having more representational power, where d is the dimension of word vectors.

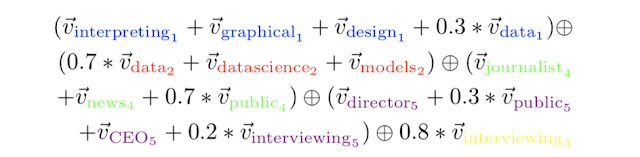

Furthermore, we can do a weighted average of word vectors of words within a partition and concatenate the average vector across partitions to represent the document.

|

|

| Document representation by weighted partition averaging Here, + represents an element-wise vector addition, × represents scalar-vector multiplication, and ⊕ represents concatenation. |

Here, the same words (e.g. 'data,' 'public,' 'interviewing') word-vectors can be average from several partitions depending on its context. The final representation takes the weight to which each word belongs to the various topics into account as well, thus handling the multi-sense nature of words, e.g., words such as data belonging to partition 1 with probability 0.3 and partition 2 with probability 0.7.

Furthermore, we can have context-sensitive word vectors of multi-sense words such that data1 and data2 have different representations depending on the surrounding context.

Ways to partition vocabulary

Let us now look at several ways we can partition word vocabulary utilizing the unsupervised pre-trained word vectors.

- K-Mean Clustering [1] : One can use the k-mean clustering algorithm to cluster words. However, this way of partitioning will ignore the multi-sense (polysemous) nature of words. The partition would be sparse and hence make the intermediate and final representation sparse. K-mean suffers from redundant partition problem (lack partition diversity) due to overfitting to average due to log-likelihood maximization.

- GMM & Sparse GMM Clustering [2,3] : However, if we shift to fuzzy clustering like Gaussian Mixture Model (GMM) we can capture the multisense nature of word vectors at the expense of losing the representation sparsity. However, to achieve the sparsity in the representation one can manually hard threshold word cluster assignments (Sparse-GMM). But the representation still suffers from cluster redundancy.

- Dictionary Learning [4]: To achieve require sparsity, fuzzy nature, and diversity, one can use a dictionary learning algorithm like K-SVD. Dictionary learning is analogous to GMM with an L0 sparsity constraint. K-SVD solves a convex relaxation of the problem with the L1 regularization penalty. Due to sparsity constraints, the partition is sparse and much more diverse (less redundant).

|

| Ways to partition vocabulary |

Ways to represent words

Let's now look at several ways we can represent words by using a vector representation.

- SGNS Unisense Embedding [1,2]: Mikolov et al. word2vec represent each word in a 200-D dimension word vectors in which similar meaning words are closer to each other in vector space.

- Doc2vecC Unisense Embedding [3,4]: Trained similar to word2vec SGNS except with word corruption while representing the context in training. This representation essentially regresses the common component of word vectors which arise due to frequently occurring common words such as stop words like 'and, 'the' and 'to' etc. Since we are also averaging this representation suits out approch.

- Multi-Sense Embedding [3]: One can first annotate corpus words with corresponding sense by looking at the surrounding context and using AdaGram for word sense disambiguation algorithm.

- Contextualized Embedding: With the advancement of generalized Language models such as GPT, UMLFit, Elmo, and BERT for learning contextualized word representation, which automatically capture meaning w.r.t to the context in use (yet to be explored).

|

| Ways to partition words |

Ways to weigh words

There are several ways to weigh each partition or word in partitions.

- Inverse document frequency [1,2,3]: We can directly concatenate the weighted average of IDF of words of documents for each cluster to which they belong. It is well known the direct weighting by multiplication word representation to the corresponding IDF of words performs well.

- Smooth inverse frequency [4]: Arora et al. 2017 show that smooth inverse frequency often weights rare words more smoothly compare to IDF. This weighting performs better than IDF for documents with more rare words.

|

Ways to weigh wordsEfficient Pre-Computation Based Algorithm |

Empirical Results

We tested the hypothesis of partition-based average word embedding representations on various tasks. In this blog post, I would only discuss the text categorization task we perform on the multi-class dataset (20NewsGroup) and multilabel dataset (Reuters). The embedding outperforms many sophisticated approaches involving tensors and some neural network approaches (see other tasks). Furthermore, due to the simplicity of the approach, the time and space complexity of the above representation (especially the sparse ones Sparse GMM and K-SVD) is much lower than other representations. One can, in addition, perform a dimensionality reduction over pre-computed word vectors to reduce representation size without compromising too much over the accuracy. For details on other tasks refer to the papers mentioned in the references.

|

| Multi-Class Classification- 20 NewsGroup – 20 classes, Equal Sampling, 200-300 word documents, Language: English |

|

| Multi-Label Classification- Reuters - ~5000 labels, Unequal Sampling, 400-500 word documents, Language: English |

|

Figure 2: Effect of a varying number of clusters (left), varying word vector dimension (center), and varying sparsity parameter (right) on performance for 20NewsGroup with SCDV [2]

|

|

| Ablation study to show the effect on each choice Learning lower-dimensional manifold |

One can learn a lower-dimensional manifold from sparse word topic vectors. We can empirically show the sparsity helps in effective learning of the manifold. In figure 3 below, we apply random projection on non-sparse word topic vectors vs sparse word-topic-vector (sparse GMM or ksvd). We observe sparse representation helps in learning a better manifold. A similar observation was observed with other common approaches for manifold learning (autoencoders and subspace PCA).

|

| Figure 3: Random Projection on SCDV and SCDV-MS |

Furthermore, we show some nice connections of our embedding with Word Mover Distance and SIF embeddings (refer to [4] for details). Many document representations can be written as kernelized versions of word(local) and global topic(global) embeddings. We also showed that the effect of partition is more important for multi-sentence large documents.

Some results on the Japanese (Livedoor dataset) could be found here. Similar conclusions.

Summary: Instead of using a simple weighted word vector averaging a partition-based weighted average could be a stronger baseline for document representation.

Some results on the Japanese (Livedoor dataset) could be found here. Similar conclusions.

Summary: Instead of using a simple weighted word vector averaging a partition-based weighted average could be a stronger baseline for document representation.

See P-SIF used for image categorization here.

Limitations

- Doesn't account for syntax, grammar, and word order and only focuses on capturing local and global semantics.

- Currently, a disjoint process of partitioning, averaging and learning, can we model everything as a single joint process.

References

[1] Product Classification in E-Commerce using Distributional Semantics; Vivek Gupta, Harish Karnick, Ashendra Bansal, Pradhuman Jhala; Published at COLING 2016. [Paper] [Poster] [PPT]

[2] Sparse Composite Document Vectors using soft clustering over distributional representations, Dheeraj Mekala*, Vivek Gupta*, Bhargavi Paranjape, Harish Karnick; Published at EMNLP 2017. [Paper] [PPT]

[3] Word Polysemy Aware Document Vector Estimation; Vivek Gupta, Ankit Saw, Harshit Gupta, Pegah Nokhiz and Partha Talukdar; Presented at NAACL-SRW 2019 (non-archival). extended version accepted at ECAI 2020. [Paper] [PPT]

[4] Document Embeddings using Partition Averaging; Vivek Gupta, Ankit Saw, Pegah Nokhiz, Praneeth Netrapalli, Piyush Rai, and Partha Talukdar; accepted to appear at AAAI 2020. [Paper] [PPT]

Codes of SCDV representation could be located here.

Codes of SCDV representation could be located here.

Comments

Post a Comment